As large language models (LLMs) power more products, continuous improvement becomes essential to the user experience. One of the most effective ways to evolve an LLM over time is an "LLM feedback loop." This process involves gathering user feedback from LLM-user interactions and feeding it back into the LLM for iterative improvements.

In this guide, we’ll explain the LLM feedback loop, its key stages, and how you can leverage it to build better LLM-powered products. We'll also look at different ways to integrate user feedback and tools like Nebuly to manage the entire process, from data gathering to A/B testing.

What is the LLM Feedback Loop?

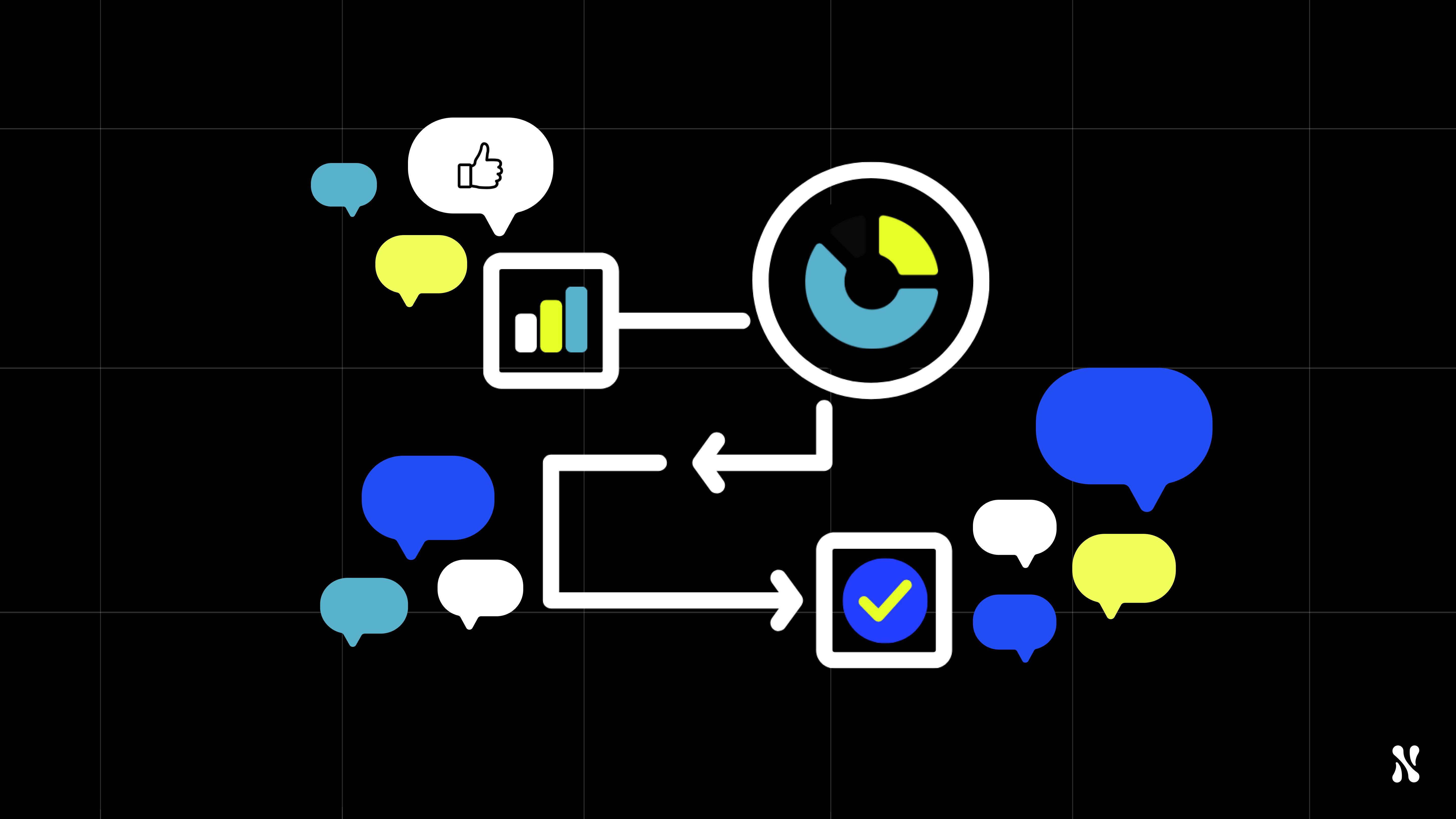

The LLM feedback loop is a cycle where feedback from users interacting with an LLM is collected, analyzed, and then used to improve the model's performance. This continuous process helps refine the model to ensure that it better meets user expectations and delivers more accurate, helpful responses. The main stages of this loop are:

- Product Availability: Make your LLM-powered product accessible to users.

- Feedback Collection: Gather explicit and implicit feedback from users.

- Model Improvement: Use feedback to adjust and improve the LLM, whether through system prompts, Retrieval-Augmented Generation (RAG), fine-tuning, or creating evaluation datasets.

Let’s break down these stages and understand how they contribute to the feedback loop.

Key Stages of the LLM Feedback Loop

1. Making Your LLM-powered Product Available

The first step in the feedback loop is deploying your LLM into production. Whether it’s a chatbot, virtual assistant, or another LLM-driven tool, the product must be accessible to users for real-world interactions. This stage provides the foundation for gathering feedback. In general, LLM products should be put in front of real users sooner than traditional products. This is due to their nondeterministic nature.

2. Collecting Explicit and Implicit Feedback

Once your product is live, the next step is gathering user feedback. There are two main types of feedback:

- Explicit Feedback: This is the direct feedback users provide, such as thumbs-up or thumbs-down ratings, star ratings, or feedback forms. For instance, after an interaction, users might be asked to rate how helpful the LLM’s response was. However, explicit feedback is rare: fewer than 1% of interactions yield this type of feedback, making it insufficient as the sole basis for evaluation.

- Implicit Feedback: This type of feedback is inferred from user behavior, such as response time, follow-up questions, or even actions like copying and pasting responses. Implicit feedback is far more abundant and can provide valuable insights into how well the LLM is performing.

By combining both explicit and implicit feedback, you create a more holistic understanding of how users are interacting with your LLM-powered product.
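As a rough illustration of combining the two feedback types, the sketch below scores each interaction, letting an explicit rating take precedence over inferred signals. The `Interaction` schema, the signal names, and the 0.5 weights are assumptions made for this example, not part of any particular tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    """One LLM response plus the feedback observed for it (hypothetical schema)."""
    response_id: str
    thumbs: Optional[int] = None  # explicit: +1, -1, or None if the user gave no rating
    copied: bool = False          # implicit: the user copied the response
    rephrased: bool = False       # implicit: the user re-asked the same question


def satisfaction_score(item: Interaction) -> float:
    """Return a score in [-1, 1]; an explicit rating overrides inferred signals."""
    if item.thumbs is not None:
        return float(item.thumbs)
    score = 0.0
    if item.copied:
        score += 0.5  # assumed weight: copying suggests the answer was useful
    if item.rephrased:
        score -= 0.5  # assumed weight: rephrasing suggests the answer missed
    return score


interactions = [
    Interaction("r1", thumbs=1),
    Interaction("r2", copied=True),
    Interaction("r3", rephrased=True),
    Interaction("r4"),
]
scores = {i.response_id: satisfaction_score(i) for i in interactions}
print(scores)
```

In practice the weights would need to be calibrated against whatever explicit ratings are available, since the same behavior can mean different things in different products.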

3. Improving the LLM

Once feedback is collected, it’s time to implement changes to enhance the model. Here are four primary ways to improve your LLM:

- System Prompts: These are changes made to the prompt structure or guidance given to the LLM. Modifying system prompts is the easiest and most immediate way to tweak responses.

- Retrieval-Augmented Generation (RAG): In RAG, the model uses an external knowledge base to retrieve relevant information before generating a response. Implementing RAG can significantly improve factual accuracy but requires more complex infrastructure.

- Fine-tuning: This involves training the LLM further using new data to adapt it to specific use cases. Fine-tuning is more resource-intensive than modifying prompts or adding RAG but offers deeper customization.

- Evaluation Datasets: You can build a user-rated evaluation dataset from implicit user feedback. This process involves three steps: (1) extracting feedback, (2) clustering by user intent, and (3) using the feedback for evaluation. Read more about the framework here.
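The three evaluation-dataset steps can be sketched in a few lines. This is a toy version under stated assumptions: the records are made-up data, and a simple keyword lookup (`INTENT_KEYWORDS`, hypothetical) stands in for a real intent-clustering step, which would more likely rely on embeddings or a classifier.

```python
from collections import defaultdict

# Step 1: extracted feedback records (hypothetical data; signal is +1 or -1).
records = [
    {"prompt": "How do I reset my password?", "signal": 1},
    {"prompt": "Password reset link not working", "signal": -1},
    {"prompt": "Cancel my subscription", "signal": -1},
    {"prompt": "How to cancel my plan?", "signal": -1},
]

# Step 2: group by intent. A keyword lookup stands in for real clustering.
INTENT_KEYWORDS = {"password": "account_access", "cancel": "cancellation"}


def intent_of(prompt: str) -> str:
    """Return the first matching intent label, or "other" if none matches."""
    text = prompt.lower()
    for keyword, intent in INTENT_KEYWORDS.items():
        if keyword in text:
            return intent
    return "other"


by_intent = defaultdict(list)
for rec in records:
    by_intent[intent_of(rec["prompt"])].append(rec)

# Step 3: share of positive signals per intent, usable as an evaluation baseline.
positive_rate = {
    intent: sum(r["signal"] > 0 for r in recs) / len(recs)
    for intent, recs in by_intent.items()
}
print(positive_rate)
```

Intents with a low positive rate are natural candidates for targeted prompt changes, and their prompts can be re-run against a new model version to check whether the rate improves.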

A/B test your changes. After making improvements, run an A/B test to confirm that they actually provide a better user experience. Tools like Nebuly can assist in managing this process, helping you automatically analyze user interactions, create feedback datasets, and measure the effectiveness of model improvements.
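One common way to judge an A/B test is a two-proportion z-test on a success metric, such as the share of interactions with a positive feedback signal. The sketch below uses only the standard library; the function name and the sample counts are illustrative assumptions, and this is not a description of how any specific tool evaluates experiments.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Compare success rates of variants A and B; return (z, two-sided p-value)."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: positive interactions out of total, per variant.
z, p_value = two_proportion_z_test(400, 1000, 460, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference between variants is unlikely to be noise, but the threshold and the sample size should be fixed before the test starts rather than chosen after looking at the results.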

Conclusion: The Power of the LLM Feedback Loop

The LLM feedback loop (product deployment, user feedback collection, and iterative model improvement) is essential for creating better, more user-friendly AI models. By tapping into both explicit and implicit feedback, and using tools like Nebuly to streamline the process, you can ensure that your LLM product continuously evolves to meet user needs.

Nebuly is designed to help you manage the entire LLM feedback loop, from gathering user insights to implementing and testing improvements. With the right feedback loop in place, your LLM-powered product will not only meet expectations but continuously exceed them.

If you’d like to learn more about Nebuly, please request a demo here.